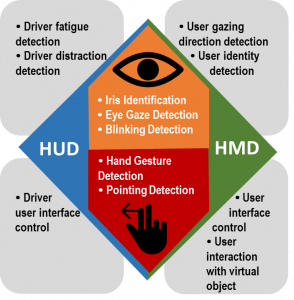

The natural user interface sensing platform focuses on extracting information from the user's eyes and hands.

From the captured eye image sequence, we can obtain eye-related information such as the user's gaze direction, iris information and blink frequency. By applying the extracted eye information to the HUD platform, we can issue a warning when we detect that the driver is not in a proper driving condition, e.g. drowsy or distracted. By applying the extracted eye information to the HMD platform, we can determine the user's identity for login or online payment. By analysing the user's gaze direction, we can also estimate the user's intention and concentration level for applications such as gaming or education.
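As a rough illustration of how blink frequency can feed a drowsiness warning, the sketch below assumes that an upstream eye tracker already produces a per-frame eye-openness value in [0, 1]. The helper name `analyse_eye_openness`, its thresholds, and the use of PERCLOS (the fraction of frames in which the eyes are closed, a commonly used drowsiness measure) are assumptions for illustration, not the platform's documented method.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class EyeStats:
    blinks: int               # number of detected eye closures
    blinks_per_minute: float  # blink frequency over the analysed window
    perclos: float            # fraction of frames with the eyes closed
    drowsy: bool              # True if PERCLOS exceeds the chosen limit


def analyse_eye_openness(openness: Sequence[float], fps: float,
                         closed_threshold: float = 0.2,
                         perclos_limit: float = 0.15) -> EyeStats:
    """Hypothetical helper (not a platform API).

    openness: assumed per-frame eye openness in [0, 1], 1 = fully open.
    Thresholds are illustrative defaults, not tuned values.
    """
    if not openness or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    # Mark each frame as closed if openness drops below the threshold.
    closed = [v < closed_threshold for v in openness]
    # Count a blink on each open -> closed transition; a closure already
    # in progress at the first frame is not counted.
    blinks = sum(1 for prev, cur in zip(closed, closed[1:]) if cur and not prev)
    duration_min = len(openness) / fps / 60.0
    perclos = sum(closed) / len(closed)
    return EyeStats(blinks=blinks,
                    blinks_per_minute=blinks / duration_min,
                    perclos=perclos,
                    drowsy=perclos > perclos_limit)


if __name__ == "__main__":
    # Ten frames at 1 fps containing two eye closures.
    stats = analyse_eye_openness(
        [1.0, 1.0, 0.1, 0.1, 1.0, 1.0, 0.1, 1.0, 1.0, 1.0], fps=1.0)
    print(stats)
```

In a real HUD setting the window would span many seconds of video, and the thresholds would have to be calibrated per camera and per user.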

From the sensed hand and finger image sequence, the user's gesture, hand pose and pointing direction can be determined. By applying the extracted hand or finger information to the HUD platform, the driver can control the HUD with simple gestures such as flipping right or left. By applying the hand information to the HMD platform, the user can easily provide input through the user interface and interact with displayed virtual objects.
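In its simplest form, a left/right flip gesture can be recognised from the horizontal track of the hand across consecutive frames. The sketch below assumes a hand tracker that reports one normalised x position per frame; the function `classify_swipe` and its thresholds are hypothetical and only illustrate the idea.

```python
from typing import Optional, Sequence


def classify_swipe(xs: Sequence[float], min_travel: float = 0.3,
                   min_consistency: float = 0.8) -> Optional[str]:
    """Hypothetical flip-gesture classifier (not a platform API).

    xs: assumed normalised horizontal hand positions, one per frame
        (0 = left edge of the image, 1 = right edge).
    Returns "flip_right", "flip_left", or None if no clear swipe is found.
    """
    if len(xs) < 2:
        return None
    # Per-frame horizontal displacement.
    steps = [b - a for a, b in zip(xs, xs[1:])]
    travel = xs[-1] - xs[0]
    # Reject small movements such as hand jitter.
    if abs(travel) < min_travel:
        return None
    # Require most steps to move in the same direction as the net travel,
    # so that back-and-forth waving is not mistaken for a flip.
    same_dir = sum(1 for s in steps if s * travel > 0)
    if same_dir / len(steps) < min_consistency:
        return None
    return "flip_right" if travel > 0 else "flip_left"


if __name__ == "__main__":
    print(classify_swipe([0.1, 0.3, 0.5, 0.7]))
    print(classify_swipe([0.5, 0.52, 0.49, 0.51]))
```

Whether a rightward motion in image coordinates corresponds to the driver's right depends on whether the camera image is mirrored, so the sign convention would need to match the actual installation.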

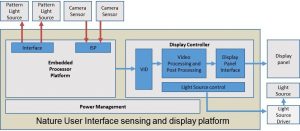

To capture eye and finger images efficiently, a dedicated hardware processing platform is integrated to synchronize the external light source with the camera sensor. The hardware processing platform processes the incoming camera data and then performs real-time analysis of the eye and finger information. Because the system is all-in-one, the extracted information can be displayed on the HUD or HMD system more conveniently and effectively.
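One common way to exploit light-source/camera synchronization is to fire an infrared LED only during the exposure of every other frame, then subtract each unlit frame from the preceding lit frame to suppress ambient light such as sunlight. This is an assumed technique, not one taken from the platform's design. The function names, timing values and list-of-rows frame representation below are hypothetical.

```python
from typing import List, Sequence, Tuple

Frame = List[List[int]]  # hypothetical grayscale frame: rows of 0..255 pixels


def strobe_schedule(num_frames: int, frame_period_us: int,
                    exposure_us: int) -> List[Tuple[int, int, bool]]:
    """Return (exposure_start_us, exposure_end_us, led_on) for each frame.

    Assumed scheme: the LED is driven only inside the exposure window of
    even-numbered frames, so odd frames capture ambient light alone.
    """
    if exposure_us > frame_period_us:
        raise ValueError("exposure cannot exceed the frame period")
    return [(i * frame_period_us, i * frame_period_us + exposure_us, i % 2 == 0)
            for i in range(num_frames)]


def ambient_suppressed(lit: Frame, unlit: Frame) -> Frame:
    """Subtract the unlit frame from the lit one, clamping at 0, so that
    mainly the LED light reflected by the eye or hand remains."""
    return [[max(a - b, 0) for a, b in zip(row_l, row_u)]
            for row_l, row_u in zip(lit, unlit)]


def process_stream(frames: Sequence[Frame],
                   led_on: Sequence[bool]) -> List[Frame]:
    """Pair each LED-on frame with the immediately following LED-off frame."""
    out = []
    for i in range(len(frames) - 1):
        if led_on[i] and not led_on[i + 1]:
            out.append(ambient_suppressed(frames[i], frames[i + 1]))
    return out


if __name__ == "__main__":
    for start, end, on in strobe_schedule(4, frame_period_us=16_667, exposure_us=2_000):
        print(start, end, "LED on" if on else "LED off")
```

Differencing adjacent frames assumes the eye or hand moves little between the two exposures; a higher frame rate or a shorter gap between the paired exposures makes that assumption hold better.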